Teaser figure panels: Portraits, Diverse Scenes

Generate consistent subjects across individual creations without reference, tuning, or access to other creations.

While text-to-image generative models can synthesize diverse and faithful content, subject variation across multiple creations limits their application in long content generation. Existing approaches require time-consuming tuning, need references for all subjects, or assume that the latent features of other creations are always accessible.

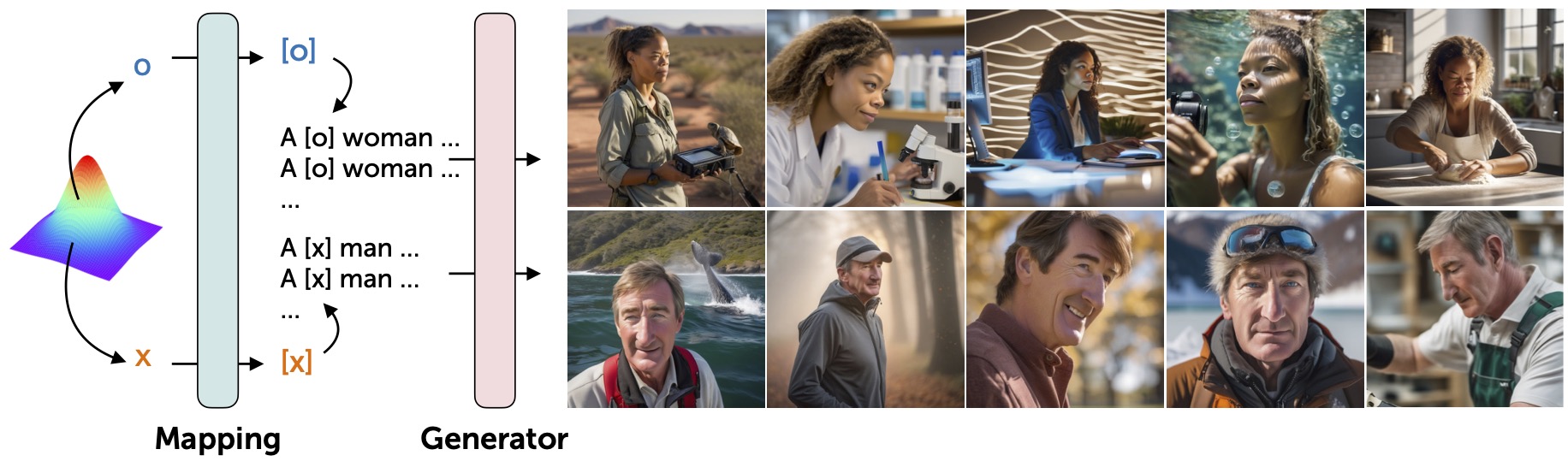

We introduce Contrastive Concept Instantiation (CoCoIns), a generation framework that achieves subject consistency across multiple individual creations without tuning or reference. CoCoIns creates instances of concepts through a unique association that connects latent codes to subject instances. Given two latent codes (o and x), CoCoIns converts each into a pseudo-word ([o] and [x]) that determines the appearance of a subject concept. By reusing the same codes, users can consistently generate the same subject instances across multiple creations.
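The reuse property described above can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: `make_mapping_network` is a hypothetical stand-in (a fixed random linear map) for the learned mapping network, `insert_pseudo_word` is a hypothetical helper, and the dimensions are arbitrary. The only point it shows is that a deterministic mapping sends the same latent code to the same pseudo-word, which is then placed before the subject token.

```python
import random


def make_mapping_network(code_dim, embed_dim, seed=0):
    """Hypothetical stand-in for the mapping network: a fixed random linear map."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(code_dim)]
               for _ in range(embed_dim)]

    def mapping(code):
        # Deterministic: the same latent code always yields the same pseudo-word.
        return [sum(w * c for w, c in zip(row, code)) for row in weights]

    return mapping


def insert_pseudo_word(tokens, concept, pseudo_word):
    """Return a copy of tokens with pseudo_word placed before the first `concept`."""
    idx = tokens.index(concept)
    return tokens[:idx] + [pseudo_word] + tokens[idx:]


mapping = make_mapping_network(code_dim=4, embed_dim=3)

# Two latent codes, playing the roles of "o" and "x" (values are arbitrary).
code_o = [0.1, -0.3, 0.7, 0.2]
code_x = [0.9, 0.4, -0.5, 0.0]

w_o_first = mapping(code_o)   # pseudo-word [o] for one creation
w_o_again = mapping(code_o)   # pseudo-word [o] for a later, separate creation
w_x = mapping(code_x)         # pseudo-word [x] for a different subject instance

# The pseudo-word is placed directly before the subject concept token.
prompt_a = insert_pseudo_word(["a", "man", "reading"], "man", ("[o]", w_o_first))
prompt_b = insert_pseudo_word(["a", "man", "hiking"], "man", ("[o]", w_o_again))
```

Because `prompt_a` and `prompt_b` carry an identical pseudo-word, a model trained as described would be conditioned on the same subject instance in both, without either creation needing access to the other.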

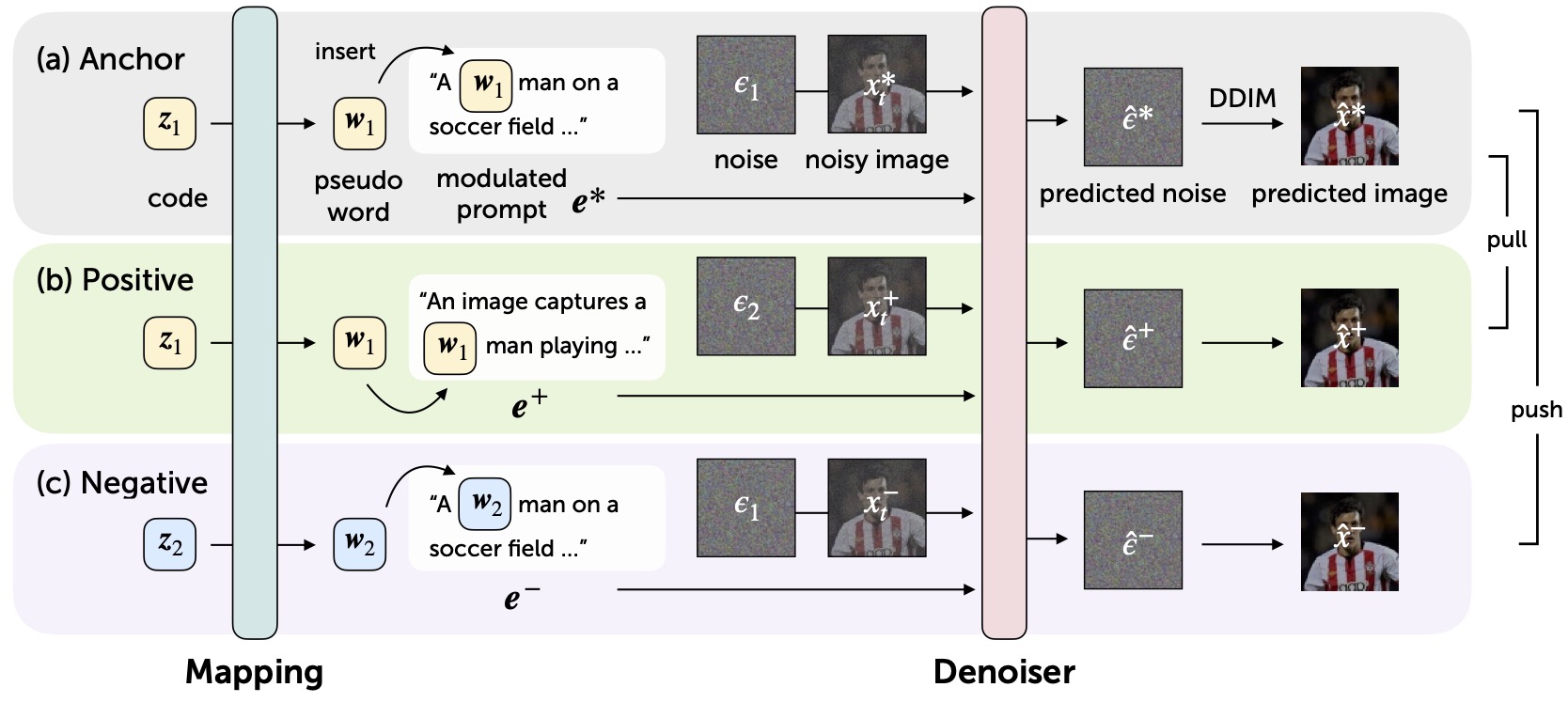

We develop a contrastive learning approach to build an association between input latent codes and concept instances. For each training image, we generate two image descriptions and randomly sample two latent codes z1 and z2. The mapping network first transforms the two latent codes into pseudo-words w1 and w2. We then assemble a triplet of description and latent-code combinations: (a) an anchor sample with description embedding e* modulated by inserting w1 before the target concept token, (b) a positive sample e+ with a similar description embedding modulated with w1, and (c) a negative sample e- with the same prompt as the anchor but modulated with a different pseudo-word w2. The network is trained with a triplet loss to differentiate the approximated images x*, x+, and x-, which are obtained from the denoiser predictions ε*, ε+, and ε-.
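As a rough illustration of the training objective, the sketch below computes a standard margin-based triplet loss on toy vectors standing in for the approximated images x*, x+, and x-. The Euclidean distance, the margin value, and the function names are assumptions chosen for illustration; the text above does not specify which distance or margin CoCoIns uses.

```python
import math


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss: pull the positive toward the anchor and push the
    negative at least `margin` farther away than the positive."""
    d_pos = l2_distance(anchor, positive)
    d_neg = l2_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


# Toy stand-ins for the approximated images.
x_anchor = [0.0, 0.0]     # x*: anchor prompt with pseudo-word w1
x_positive = [0.0, 1.0]   # x+: similar prompt with the same w1
x_negative = [3.0, 4.0]   # x-: anchor prompt with a different w2

# Well separated: distance to positive is 1, to negative is 5, so the loss is 0.
loss_separated = triplet_loss(x_anchor, x_positive, x_negative)

# Roles swapped to show a violated triplet: 5 - 1 + 1 = 5.
loss_violated = triplet_loss(x_anchor, x_negative, x_positive)
```

The loss is zero once samples sharing a pseudo-word are closer to each other than to samples with a different pseudo-word by at least the margin, which is the behavior the triplet construction above is meant to encourage.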

In addition to human faces, CoCoIns can be applied to multiple subjects or general concepts like animals.

@article{hsin-ying2025cocoins,
  title={CoCoIns: Consistent Subject Generation via Contrastive Instantiated Concepts},
  author={Lee Hsin-Ying and Kelvin C.K. Chan and Ming-Hsuan Yang},
  journal={Transactions on Machine Learning Research},
  year={2025},
}